About Webcomics and Automating the Wrong Thing

8 May is Webcomic Day. Last year, to celebrate, I posted my own sci-fi mini-comic exploring our reliance on automating daily tasks. This year, I want to share a personal reflection on why some automation is not adopted as widely as one might expect. More precisely: why don't visual artists like generative AI?

As a computer scientist by trade and an amateur artist, I would love to see technology support the visual arts, and webcomics in particular. Webcomics are usually free to read and are published online, one or a few pages per week. Whether made by individuals or small groups, they are time-consuming to create. Free, regular pages are a great treat, but this also means that if a page ends on a cliffhanger, readers have a whole week to agonise over what might happen next! Wouldn't it be great to speed up webcomic production with AI?

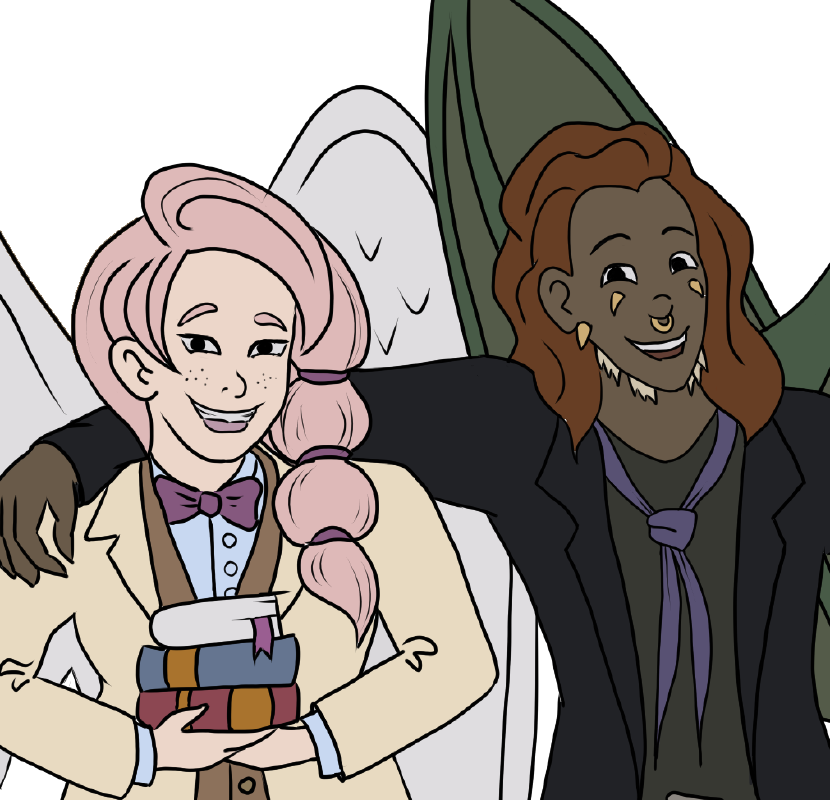

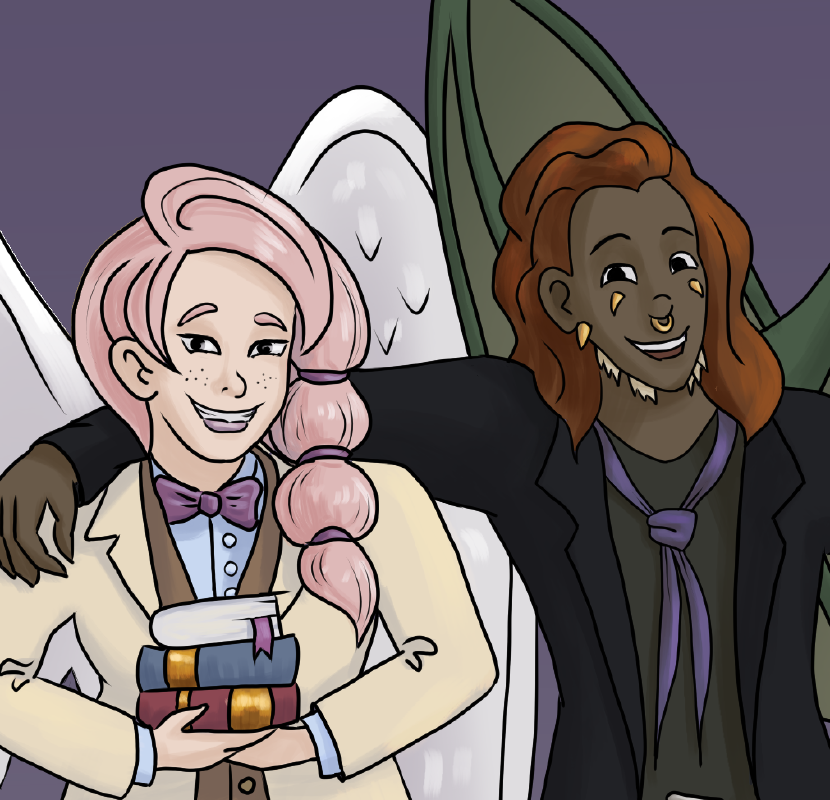

A few years back, I thought this would make a great project for a master's student (spoiler: it didn't, but the student in question did great anyway). My thinking was this: can we identify a step in comic making that is repetitive and not very satisfying, and then automate it? After scouting some forums, I identified "flat colouring" as a candidate. For comics with lineart, colouring is roughly split into two stages. Stage one, called "flat colouring", means blocking in areas with their base colour and shading. Stage two involves adding lights, shadows and effects.

Here, the lineart, flat colours and final picture are illustrated with my fan art of characters from Sombulus.
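To make "blocking areas with their base colour" a little more concrete, here is a minimal, non-AI sketch of the mechanical core of flat colouring: a flood fill that spreads a base colour from a seed pixel until it hits the lineart. It assumes clean, binary lineart stored as strings (`#` for a line pixel, `.` for empty space), 4-connected regions, and hand-picked seed points; the function name `flat_colour` and the colour labels are hypothetical and not taken from any real tool.

```python
from collections import deque

LINE = "#"  # hypothetical marker for a black lineart pixel


def flat_colour(lineart, seeds):
    """Flood-fill each region containing a seed with that seed's base colour.

    lineart: list of equal-length strings, '#' for line pixels, '.' for empty.
    seeds: dict mapping (row, col) -> colour label, processed in insertion order.
    Returns a grid (list of lists): line pixels stay '#', filled pixels hold
    their colour label, and pixels no seed reached stay None.
    """
    height, width = len(lineart), len(lineart[0])
    out = [[LINE if ch == LINE else None for ch in row] for row in lineart]
    for (r, c), colour in seeds.items():
        if out[r][c] is not None:
            continue  # seed sits on a line or in an already-coloured region
        out[r][c] = colour
        queue = deque([(r, c)])
        while queue:  # breadth-first fill, stopped only by line pixels and edges
            y, x = queue.popleft()
            for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                ny, nx = y + dy, x + dx
                if 0 <= ny < height and 0 <= nx < width and out[ny][nx] is None:
                    out[ny][nx] = colour
                    queue.append((ny, nx))
    return out


# Two closed regions separated by a vertical line.
art = [
    "#######",
    "#..#..#",
    "#..#..#",
    "#######",
]
result = flat_colour(art, {(1, 1): "skin", (1, 4): "hair"})

# The same drawing with a gap in the dividing line.
leaky_art = [
    "#######",
    "#.....#",
    "#..#..#",
    "#######",
]
leaky = flat_colour(leaky_art, {(2, 1): "skin", (2, 4): "hair"})
```

The second drawing shows why a naive fill is not enough: a one-pixel gap in the lineart lets the first colour leak into the neighbouring region, which is exactly the kind of lineart mistake artists fix during this stage. Deciding *which* colour each region should get, consistently from page to page, is the part that recognition or classification would have to add on top.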

Given that the flat colours often do not change much between pages, and the same character is usually coloured the same way, one would think that some sort of object recognition or classification could greatly speed up this step. Sure, as one of my comic-making idols pointed out, people also fix mistakes in the lineart at this stage, but that only makes it a more interesting challenge!

Well, it turns out that Convolutional Neural Networks, especially the pre-trained ones our brave student had to work with, do not work well with clean, black-and-white lines. There are no good datasets, and the synthetic lineart created to train similar projects seems almost intentionally greyscale and messy; counterintuitively, such messy lineart is easier for Neural Networks (NNs) to interpret. What is more, flat colours are not a natural thing for NNs to produce. NNs are great at working with the textures and patterns found in photos, but not with plain black lines (let me plug G'MIC, a non-AI framework that works with lineart and flat colours).

Since then, AI tools for creating art have improved dramatically. DALL-E was trending last June, and Stable Diffusion has been popular since September. Yet they still suffer from the same problem as the older NNs: they do what they are good at, not what artists need them to do. They replace the creative part of art instead of supporting the laborious and repetitive parts of the process.

I believe this is one of the reasons visual artists are so strongly opposed to AI art, whereas writers are less opposed to GPT-like writing tools and programmers are friendlier towards GitHub Copilot. Copyright issues arise in all three cases: all these models are trained on data collected from the internet before anyone thought about how to licence it for AI use. Yet the issue is raised far more often for AI art. Could the difference be that large language models can support writers, while generative art models can only compete with artists?

With a foot in both camps, I would love to bridge this divide, but I also worry that it is too late for visual artists to embrace AI art, even if it were focused on what the market actually wants. We should be careful not to repeat this mistake in the decision-making space: we need decision-support tools, not decision-making tools.

PS,

If you are interested in the legal discussion of AI art, there are many excellent pieces, but I bet you haven’t read this one yet: Legal and moral issues of AI creativity.